Corpus collection is the first step before you even think about machine learning and linguistics. While there have been serious, concerted efforts to compile and publish corpora for many languages, Urdu is nowhere to be seen in this context. Why? That warrants a separate, detailed post, which I will inshaAllah write sometime in the near future.

In this post, I want to share my first-hand experience of collecting a sizable body of raw Urdu plain text from the open source Wikipedia, so that I can not only use it in my research but also publish it under an open source license for others to benefit from.

Wikipedia publishes complete database backup dumps of

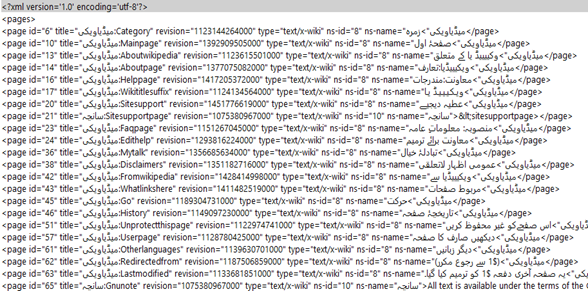

“all Wikimedia wikis, in the form of wikitext source and metadata embedded in XML. A number of raw database tables in SQL form are also available”. “These snapshots are provided at the very least monthly and usually twice a month.”

As I was interested in Urdu Wikipedia, I downloaded the following two files from the Urdu Wikipedia database backup dumps page:

The next task was to extract the actual page content from these dump files, which follow a specific schema. Wikipedia maintains a comprehensive list of open source parsers written in different programming languages and published under different open source licenses. Because my goal was to collect open source Urdu plain “text” and my programming language of choice was “Java”, I opted for Wikiforia.
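To give a feel for the schema involved, here is a minimal sketch (not Wikiforia's code) that walks a MediaWiki export document with the JDK's StAX parser and collects each page's title and raw wikitext. The class name `DumpPageReader` and the tiny inline sample are my own illustrations. A real dump is bz2-compressed, so it would have to be decompressed first, for example with a third-party library, which this sketch leaves out.

```java
import java.io.Reader;
import java.io.StringReader;
import java.util.ArrayList;
import java.util.List;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

// Hypothetical helper, for illustration only.
class DumpPageReader {

    // Returns one {title, wikitext} pair per <page> found in the export XML.
    static List<String[]> readPages(Reader xml) {
        List<String[]> pages = new ArrayList<>();
        try {
            XMLStreamReader r = XMLInputFactory.newInstance().createXMLStreamReader(xml);
            String title = null;
            while (r.hasNext()) {
                if (r.next() == XMLStreamConstants.START_ELEMENT) {
                    String name = r.getLocalName();
                    if (name.equals("title")) {
                        // <title> holds only text, so getElementText() is safe here.
                        title = r.getElementText();
                    } else if (name.equals("text")) {
                        // <text> sits inside <revision> and holds the raw wikitext.
                        pages.add(new String[] { title, r.getElementText() });
                    }
                }
            }
            r.close();
        } catch (XMLStreamException e) {
            throw new IllegalStateException("Malformed dump XML", e);
        }
        return pages;
    }

    public static void main(String[] args) {
        // Made-up sample in the shape of a dump entry.
        String sample = "<mediawiki><page><title>اردو</title>"
                + "<revision><text>'''اردو''' ایک زبان ہے۔</text></revision>"
                + "</page></mediawiki>";
        for (String[] page : readPages(new StringReader(sample))) {
            System.out.println(page[0] + " -> " + page[1]);
        }
    }
}
```

Note that what comes out of `<text>` is still wikitext, full of markup such as `'''bold'''` and templates. Turning that into clean plain text is the hard part, and it is the reason to use a dedicated parser.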

“Wikiforia is a library and a tool for parsing Wikipedia XML dumps and converting them into plain text for other tools to use.”

So I began by cloning the Wikiforia GitHub repository onto my laptop and then ran the following command in the terminal:

java -jar wikiforia-1.2.1.jar -pages urwiki-20160501-pages-articles-multistream.xml.bz2 -output output.xml

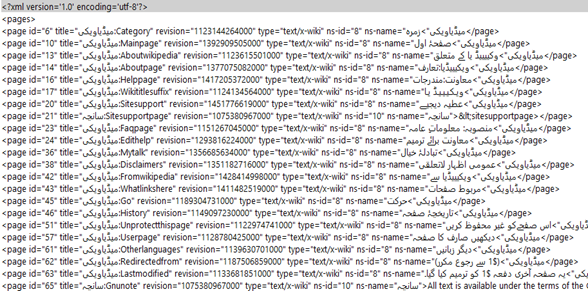

The program worked perfectly and used concurrency (one thread per logical core) to speed up processing. It took a few minutes to complete the task. However, the output was not pure plain text; it was a simplified form of XML that looked like this:

At that point I had two options:

- Run another tool to convert this output file from XML to Plain Text, or

- Add custom implementation to Wikiforia to make it output pure Plain Text
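For comparison, the first option could be sketched roughly as below. This is only an illustration under assumptions: it supposes that the simplified XML wraps each article in a `page` element with a `title` attribute and text-only content, which may not match Wikiforia's actual output exactly. The class name `XmlToPlainText` is hypothetical.

```java
import java.io.IOException;
import java.io.Reader;
import java.io.StringReader;
import java.io.StringWriter;
import java.io.Writer;
import javax.xml.stream.XMLInputFactory;
import javax.xml.stream.XMLStreamConstants;
import javax.xml.stream.XMLStreamException;
import javax.xml.stream.XMLStreamReader;

// Hypothetical second-pass converter, for illustration only.
class XmlToPlainText {

    // Writes each page as "title", newline, "body", then a blank line.
    static void convert(Reader in, Writer out) {
        try {
            XMLStreamReader r = XMLInputFactory.newInstance().createXMLStreamReader(in);
            while (r.hasNext()) {
                if (r.next() == XMLStreamConstants.START_ELEMENT
                        && "page".equals(r.getLocalName())) {
                    // Assumed layout: title as an attribute, article text as content.
                    String title = r.getAttributeValue(null, "title");
                    String body = r.getElementText().trim();
                    if (title != null) {
                        out.write(title + "\n");
                    }
                    out.write(body + "\n\n");
                }
            }
            r.close();
        } catch (XMLStreamException | IOException e) {
            throw new IllegalStateException("Could not convert XML to plain text", e);
        }
    }

    public static void main(String[] args) {
        // Made-up sample input in the assumed layout.
        String sample = "<pages><page id=\"1\" title=\"اردو\">اردو ایک زبان ہے۔</page></pages>";
        StringWriter out = new StringWriter();
        convert(new StringReader(sample), out);
        System.out.print(out);
    }
}
```

Even a small converter like this has to parse the entire output file a second time.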

I opted for the second one for two reasons. First, I did not want to waste additional time and processor cycles processing the generated output a second time. Second, I thought others might benefit from this modification.

So I forked Wikiforia and added a new sink implementation, PlainTextWikipediaPageWriter.java. I also had to modify the main program, “App.java”, to add CLI support for an additional switch, “outputformat”, which defaults to “xml” and accepts only two values (for now): “xml” and “plain-text”. Once that was done, I submitted a pull request to the Wikiforia GitHub repository, in case the maintainers decide to merge the patch into the original repository.
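The idea behind the new switch can be sketched in plain Java as follows. This is not the code from my fork: the names `OutputFormatOption`, `OutputFormat`, and `parse` are made up for illustration, and the hand-rolled argument loop stands in for whatever argument parsing the real “App.java” does.

```java
// Hypothetical sketch of an "outputformat" switch with a default of "xml".
class OutputFormatOption {

    enum OutputFormat {
        XML("xml"),
        PLAIN_TEXT("plain-text");

        final String cliValue;

        OutputFormat(String cliValue) {
            this.cliValue = cliValue;
        }

        // Maps a command-line value to a format, rejecting anything unknown.
        static OutputFormat fromCli(String value) {
            for (OutputFormat format : values()) {
                if (format.cliValue.equalsIgnoreCase(value)) {
                    return format;
                }
            }
            throw new IllegalArgumentException("Unknown output format: " + value);
        }
    }

    // Looks for "-outputformat <value>"; falls back to XML when the switch is absent.
    static OutputFormat parse(String[] args) {
        for (int i = 0; i < args.length - 1; i++) {
            if (args[i].equals("-outputformat")) {
                return OutputFormat.fromCli(args[i + 1]);
            }
        }
        return OutputFormat.XML;
    }

    public static void main(String[] args) {
        System.out.println("Selected output format: " + parse(args).cliValue);
    }
}
```

Defaulting to “xml” means existing command lines keep behaving as before, and rejecting unknown values up front avoids discovering a typo only after the whole dump has been processed.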

Then I ran the following modified command to extract the plain text from the Urdu Wikipedia database dumps:

java -jar wikiforia-1.2.1.jar -pages urwiki-20160501-pages-articles-multistream.xml.bz2 -output output.txt -outputformat plain-text

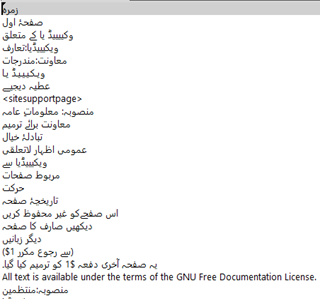

And here is what the plain-text output.txt looks like:

Finally alhamdolillah! I made my first contribution to the Urdu Corpus Community Project.